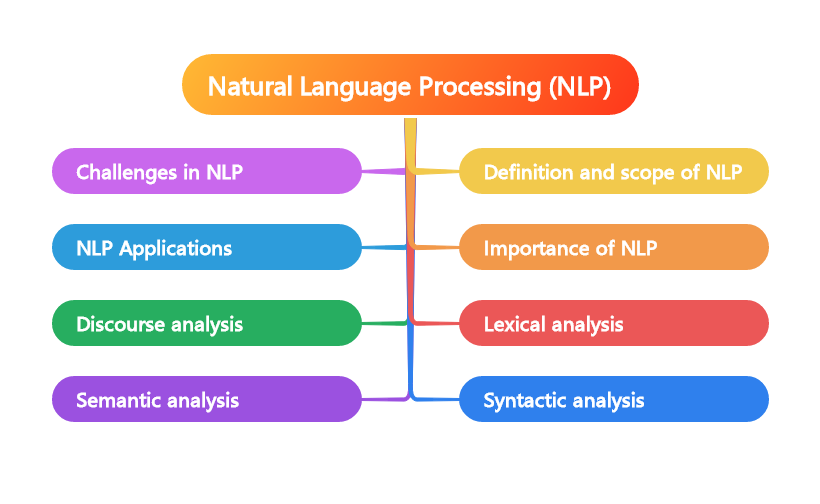

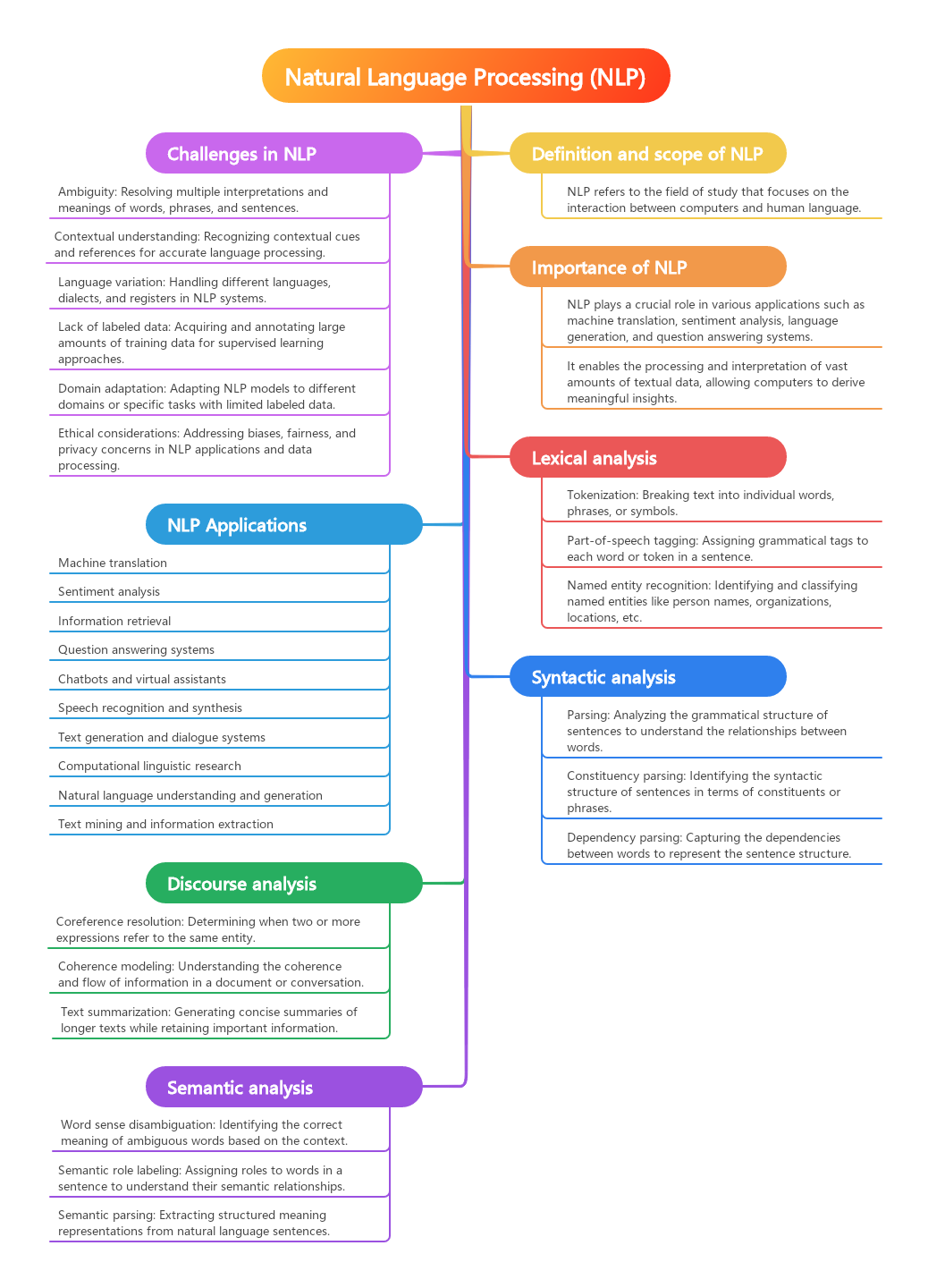

Natural Language Processing (NLP):

Explore how AI understands, interprets, and generates human language. This includes everything from chatbots to translation services and sentiment analysis.

Chapter 1: Introduction to Natural Language Processing

What is Natural Language Processing?

Natural Language Processing (NLP) is a branch of artificial intelligence that focuses on the interaction between computers and human language. It encompasses a wide range of tasks, from understanding and interpreting text to generating human-like responses.

At its core, NLP seeks to bridge the gap between human communication and computer processing. By teaching machines to understand, interpret, and generate human language, NLP enables a wide range of applications, from chatbots and virtual assistants to translation services and sentiment analysis.

One of the key challenges in NLP is the ambiguity and complexity of human language. Words can have multiple meanings, phrases can be interpreted in different ways, and context plays a crucial role in understanding the true meaning of a sentence. NLP algorithms must be able to navigate these complexities to accurately process and generate human language.

In recent years, advances in deep learning, particularly neural network models trained on large text corpora, have led to significant improvements in NLP applications. Chatbots can now hold more engaging conversations, translation services can provide more accurate results, and sentiment analysis tools can better understand the emotions and opinions expressed in text.

For software professionals looking to delve into the world of NLP, mastering the fundamentals of natural language processing is essential. This includes understanding the basic principles of linguistics, familiarizing oneself with common NLP tasks and techniques, and gaining hands-on experience with popular NLP libraries and tools.

By mastering NLP, software professionals can unlock a world of possibilities, from building more intelligent and interactive applications to revolutionizing the way we communicate and interact with technology.

History of Natural Language Processing

Natural Language Processing (NLP) has a rich history that dates back to the 1950s when the field was first conceived. The early days of NLP were marked by ambitious goals and limited technological capabilities. Researchers and scientists were fascinated by the idea of creating machines that could understand and generate human language, but progress was slow due to the complexity of language and the limitations of the available technology.

One of the first breakthroughs in NLP came in the 1960s with the development of ELIZA, a computer program created by Joseph Weizenbaum at MIT. ELIZA was designed to simulate a conversation with a psychotherapist by using simple pattern matching and substitution techniques. While ELIZA was not capable of true understanding or intelligence, it demonstrated the potential of NLP to create interactive and engaging software programs.

In the following decades, NLP continued to advance as researchers developed more sophisticated algorithms and techniques for processing and understanding language. The late 1980s and 1990s saw the rise of statistical NLP, which replaced many hand-written rules with probabilistic models and machine learning algorithms estimated from text corpora. This approach transformed the field by enabling computers to learn from data and make more accurate predictions about language.

The 21st century has brought even more rapid advancements in NLP, thanks to the availability of large datasets and powerful computational resources. Today, NLP is used in a wide range of applications, from chatbots and virtual assistants to translation services and sentiment analysis. Software professionals working in NLP have access to a wealth of tools and resources that make it easier than ever to develop innovative and effective language processing solutions.

As the field of NLP continues to evolve, software professionals must stay up-to-date on the latest developments and techniques to create cutting-edge solutions that push the boundaries of what is possible with natural language processing. By mastering the history and fundamentals of NLP, software professionals can unlock the full potential of this exciting and rapidly growing field.

Applications of Natural Language Processing

In the fast-paced world of technology, natural language processing (NLP) has become an essential tool for software professionals across various industries. This subchapter delves into the diverse applications of NLP and how it can revolutionize the way we interact with technology.

One of the most common applications of NLP is in chatbots. These AI-powered conversational agents are capable of understanding and responding to human language, providing assistance and support to users in real-time. Chatbots can be integrated into websites, messaging platforms, and mobile apps, offering a seamless and personalized user experience.

Another key application of NLP is in translation services. With the ability to analyze and interpret multiple languages, NLP algorithms can automatically translate text from one language to another with impressive accuracy. This technology has transformed the way businesses communicate with global audiences, breaking down language barriers and facilitating cross-cultural interactions.

Sentiment analysis is another powerful application of NLP, allowing software professionals to gauge the emotions and opinions expressed in text data. By analyzing social media posts, customer reviews, and other forms of online content, businesses can gain valuable insights into customer sentiment, preferences, and trends. This information can be used to improve products and services, enhance marketing strategies, and drive business growth.

Overall, the applications of NLP are vast and varied, offering software professionals endless possibilities for innovation and advancement. By mastering the principles and techniques of natural language processing, professionals can unlock the full potential of AI in understanding, interpreting, and generating human language. Whether you are developing chatbots, translation services, or sentiment analysis tools, NLP is sure to play a crucial role in shaping the future of technology.

Chapter 2: Fundamentals of Natural Language Processing

Linguistics and NLP

Linguistics and NLP are two closely intertwined fields: linguistic knowledge shapes how natural language processing technologies are designed and built. Understanding the fundamentals of linguistics is essential for software professionals working in the NLP space, as it provides the necessary knowledge and insights to build effective and accurate language models.

Linguistics is the scientific study of language, encompassing the structure, meaning, and use of language in all its forms. By understanding the principles of linguistics, software professionals can create NLP algorithms that can accurately interpret and generate human language. This knowledge allows them to develop more advanced chatbots, translation services, sentiment analysis tools, and other NLP applications that can understand and respond to human language with a high degree of accuracy.

In the context of NLP, linguistics provides the framework for designing algorithms that can analyze and process language data in a way that mimics human language comprehension. This includes understanding syntax, semantics, pragmatics, and other linguistic concepts that are essential for building effective NLP systems.

By mastering the principles of linguistics and applying them to NLP, software professionals can create more advanced and sophisticated language models that can accurately interpret and generate human language. This knowledge is essential for developing cutting-edge NLP technologies that can revolutionize the way we interact with computers and machines.

In this subchapter, we will explore the relationship between linguistics and NLP, and how software professionals can leverage this knowledge to build more advanced and effective NLP systems. By understanding the fundamentals of linguistics and applying them to NLP, software professionals can unlock new possibilities in AI-driven language processing and revolutionize the way we communicate with machines.

Text Preprocessing

Text preprocessing is a crucial step in natural language processing (NLP) that involves cleaning and preparing text data for further analysis. As software professionals working in the field of NLP, understanding the importance of text preprocessing is essential for building accurate and efficient language models.

The process of text preprocessing typically involves several key steps, including tokenization, lowercasing, removing stopwords, and stemming or lemmatization. Tokenization is the process of breaking down text into individual words or tokens, while lowercasing involves converting all words to lowercase to ensure consistency in the data. Removing stopwords, which are common words that do not carry much meaning, helps reduce noise in the text data. Stemming and lemmatization are techniques used to reduce words to their root form, making it easier for algorithms to understand and process the text.
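The four basic steps can be chained into a small pipeline. This is a minimal standard-library sketch, assuming a tiny hand-picked stopword list and a deliberately naive suffix-stripping stemmer (not the Porter algorithm); lemmatization is not shown because it needs a dictionary such as WordNet. All function names are hypothetical.

```python
import re

# Illustrative toy stopword list; real projects use a curated, much longer list.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "of", "to", "and", "in", "on", "it"}

# Naive suffix rules, tried in order (a stand-in for a real stemmer such as Porter's).
SUFFIXES = ("ing", "edly", "ed", "ly", "es", "s")

def tokenize(text):
    """Split text into alphanumeric word tokens, keeping internal apostrophes."""
    return re.findall(r"[A-Za-z0-9]+(?:'[A-Za-z]+)?", text)

def naive_stem(word):
    """Strip the first matching suffix, but only if a stem of 3+ characters remains."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def preprocess(text):
    tokens = tokenize(text)                             # 1. tokenization
    tokens = [t.lower() for t in tokens]                # 2. lowercasing
    tokens = [t for t in tokens if t not in STOPWORDS]  # 3. stopword removal
    return [naive_stem(t) for t in tokens]              # 4. stemming

print(preprocess("The cats are playing in the garden and jumped quickly."))
# ['cat', 'play', 'garden', 'jump', 'quick']
```

Libraries such as NLTK and spaCy provide production-quality versions of each step; the order shown (lowercase before stopword lookup) matters because the stopword list is lowercase.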

In addition to these basic preprocessing steps, software professionals should also consider other techniques such as handling special characters, dealing with numbers, and addressing spelling errors. Furthermore, advanced preprocessing techniques like part-of-speech tagging and named entity recognition can also be used to extract more meaningful information from the text.

By investing time and effort in text preprocessing, software professionals can improve the accuracy and performance of their NLP models. Clean and well-prepared text data can lead to more accurate language understanding, sentiment analysis, and other NLP tasks. In this subchapter, we will explore the various text preprocessing techniques and best practices that software professionals can use to enhance their NLP projects and deliver more effective solutions in the field of natural language processing.

Tokenization and Part-of-Speech Tagging

Tokenization and Part-of-Speech Tagging are two fundamental processes in Natural Language Processing (NLP) that play a crucial role in understanding and analyzing human language. In this subchapter, we will explore these concepts in depth and understand how they contribute to the overall NLP pipeline.

Tokenization is the process of breaking down a text into smaller units called tokens, which can be words, phrases, or symbols. This step is essential for various NLP tasks such as text classification, sentiment analysis, and machine translation. By tokenizing the text, we can analyze and process the data more effectively.
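Tokens can be produced at different granularities. The sketch below shows naive sentence, word, and character tokenizers built only on Python's `re` module; the regular expressions are simplifying assumptions (for example, the sentence splitter wrongly breaks after abbreviations like "Dr."), and the function names are hypothetical.

```python
import re

def sentence_tokenize(text):
    """Split after '.', '!' or '?' followed by whitespace (naive: breaks on 'Dr.')."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

def word_tokenize(text):
    """Runs of word characters and individual punctuation marks become tokens."""
    return re.findall(r"\w+|[^\w\s]", text)

def char_tokenize(text):
    """Every character, including spaces, is its own token."""
    return list(text)

text = "NLP is fun. Tokenizers split text!"
print(sentence_tokenize(text))       # ['NLP is fun.', 'Tokenizers split text!']
print(word_tokenize("NLP is fun."))  # ['NLP', 'is', 'fun', '.']
print(char_tokenize("fun"))          # ['f', 'u', 'n']
```

Modern neural models often use subword tokenizers (such as byte-pair encoding), which sit between the word and character levels shown here.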

Part-of-Speech Tagging, on the other hand, is the process of assigning a specific part of speech to each token in a sentence. This helps in identifying the grammatical structure of the text and aids in tasks such as named entity recognition, information retrieval, and text summarization. By tagging each token with its respective part of speech, we can extract valuable information and insights from the text.

Understanding how tokenization and part-of-speech tagging work is crucial for software professionals working in the field of NLP. These processes form the foundation of many advanced NLP techniques and algorithms, and mastering them can significantly improve the performance of NLP models and applications.

In this subchapter, we will delve into the various tokenization techniques, such as word tokenization, sentence tokenization, and character tokenization. We will also explore different part-of-speech tagging algorithms, including rule-based tagging, statistical tagging, and deep learning-based tagging.
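Of the tagging families listed above, rule-based tagging is the easiest to sketch. The example below is a toy, assuming a hypothetical mini-lexicon, a few suffix rules for unknown words, and a default of NOUN; it uses Universal Dependencies-style tag names. Statistical and neural taggers learn these decisions from annotated corpora instead.

```python
import re

# Hypothetical mini-lexicon; a real rule-based tagger uses a large dictionary.
LEXICON = {
    "the": "DET", "a": "DET", "an": "DET",
    "dog": "NOUN", "cat": "NOUN",
    "is": "VERB", "runs": "VERB",
    "quickly": "ADV", "and": "CCONJ", "on": "ADP",
}

# Fallback rules for words missing from the lexicon, checked in order.
SUFFIX_RULES = [
    (r"ing$", "VERB"),
    (r"ed$", "VERB"),
    (r"ly$", "ADV"),
    (r"(ous|ful|ive|able)$", "ADJ"),
    (r"^\d+(\.\d+)?$", "NUM"),
]

def rule_based_tag(tokens):
    tagged = []
    for tok in tokens:
        word = tok.lower()
        if word in LEXICON:
            pos = LEXICON[word]
        else:
            # First matching rule wins; unknown words default to NOUN.
            pos = next((t for pattern, t in SUFFIX_RULES if re.search(pattern, word)), "NOUN")
        tagged.append((tok, pos))
    return tagged

print(rule_based_tag(["The", "dog", "jumped", "on", "3", "playful", "cats"]))
# [('The', 'DET'), ('dog', 'NOUN'), ('jumped', 'VERB'), ('on', 'ADP'), ('3', 'NUM'), ('playful', 'ADJ'), ('cats', 'NOUN')]
```

The weakness is visible in the rules themselves: "red" would be tagged VERB by the `ed$` rule, which is why context-aware statistical taggers replaced purely rule-based ones.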

By the end of this subchapter, software professionals will have a comprehensive understanding of tokenization and part-of-speech tagging and how they can leverage these techniques to build more robust and accurate NLP systems.

Chapter 3: Natural Language Processing Techniques

Named Entity Recognition

Named Entity Recognition (NER) is a crucial task in Natural Language Processing (NLP) that involves identifying and categorizing named entities in text. Named entities are specific words or phrases that refer to real-world objects such as people, organizations, locations, dates, and more. By accurately recognizing and categorizing these entities, NER plays a key role in various NLP applications, including chatbots, translation services, and sentiment analysis.

In the context of NER, software professionals need to understand the importance of training machine learning models to recognize named entities effectively. This involves creating labeled datasets with examples of named entities and their corresponding categories. By feeding these datasets into machine learning algorithms, NER models can be trained to accurately identify and classify named entities in text.

There are various approaches to NER, including rule-based systems, statistical models, and deep learning techniques. Each approach has its strengths and weaknesses, and software professionals need to evaluate which method is best suited for their specific NLP tasks.
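A rule-based system is the simplest of these approaches to illustrate. The sketch assumes a small hypothetical gazetteer (a lookup list of known names) plus one regular expression for four-digit years; it returns character offsets so the output can be compared against labeled spans during evaluation. Statistical and deep learning NER models replace these hand-written lists with patterns learned from labeled data.

```python
import re

# Hypothetical gazetteer mapping known surface strings to entity categories.
GAZETTEER = {
    "Joseph Weizenbaum": "PERSON",
    "MIT": "ORG",
    "Stanford": "ORG",
    "Paris": "LOC",
}

# Illustrative DATE rule: four-digit years from 1800 to 2099 only.
YEAR = re.compile(r"\b(1[89]\d{2}|20\d{2})\b")

def rule_based_ner(text):
    """Return (start, end, surface, label) spans sorted by start offset."""
    entities = []
    # Try longer names first so a multi-word entry wins over a shorter overlap.
    for name in sorted(GAZETTEER, key=len, reverse=True):
        for m in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            overlaps = any(s < m.end() and m.start() < e for s, e, _, _ in entities)
            if not overlaps:
                entities.append((m.start(), m.end(), name, GAZETTEER[name]))
    for m in YEAR.finditer(text):
        entities.append((m.start(), m.end(), m.group(), "DATE"))
    return sorted(entities)

for _, _, surface, label in rule_based_ner("Joseph Weizenbaum worked at MIT in 1966."):
    print(surface, label)
```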

In this subchapter, software professionals will learn about the different techniques and tools available for Named Entity Recognition. They will explore how to preprocess text data, train NER models, and evaluate their performance. Additionally, they will discover practical tips and best practices for improving the accuracy and efficiency of NER systems in real-world applications.

By mastering Named Entity Recognition, software professionals can enhance the capabilities of their NLP systems and create more intelligent and effective solutions for understanding, interpreting, and generating human language.

Sentiment Analysis

Sentiment analysis is a crucial aspect of natural language processing (NLP) that software professionals need to master in order to understand how AI interprets and generates human language. In this subchapter, we will delve into the intricacies of sentiment analysis and how it can be applied in various NLP applications.

Sentiment analysis, also known as opinion mining, involves the use of computational tools and algorithms to determine the sentiment or emotion expressed in a piece of text. The result is commonly a label such as positive, negative, or neutral, and the technique can be applied to social media posts, customer reviews, news articles, and more.

One of the key challenges in sentiment analysis is the ambiguity and complexity of human language. Words or phrases that seem positive in one context can be negative in another. This is where machine learning, including deep learning, comes into play, helping software professionals train models to classify sentiment in text data more accurately.

By mastering sentiment analysis, software professionals can develop more effective chatbots that can understand and respond to user emotions, improve customer service by analyzing feedback and reviews, and enhance translation services by capturing the underlying sentiment of a text.

In this subchapter, we will explore various sentiment analysis techniques, including lexicon-based methods, machine learning algorithms, and deep learning models. We will also discuss the challenges and ethical considerations associated with sentiment analysis, and provide practical tips and best practices for implementing sentiment analysis in real-world NLP projects.
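A lexicon-based method is the simplest of the three to show concretely. This sketch assumes a tiny hypothetical polarity lexicon and a crude negation rule (flip a word's polarity if a negator appears within the three preceding tokens); published lexicons such as VADER are far larger and handle intensifiers, punctuation, and capitalization as well.

```python
import re

# Hypothetical toy polarity lexicon and negator list, for illustration only.
LEXICON = {"good": 1.0, "great": 2.0, "love": 2.0, "bad": -1.0, "terrible": -2.0, "slow": -1.0}
NEGATORS = {"not", "never", "no", "don't", "isn't"}

def lexicon_sentiment(text, window=3):
    """Sum word polarities, flipping the sign when a negator precedes within `window` tokens."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0.0
    for i, tok in enumerate(tokens):
        if tok in LEXICON:
            negated = any(t in NEGATORS for t in tokens[max(0, i - window):i])
            score += -LEXICON[tok] if negated else LEXICON[tok]
    if score > 0:
        return "positive", score
    if score < 0:
        return "negative", score
    return "neutral", score

print(lexicon_sentiment("I love this phone, the camera is great"))  # ('positive', 4.0)
print(lexicon_sentiment("The service was not good"))                # ('negative', -1.0)
```

The fixed negation window is a known weakness: in "not slow, and great" it wrongly negates "great" as well, which is one reason machine learning models tend to outperform simple lexicons on nuanced text.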

Overall, mastering sentiment analysis is essential for software professionals working in the field of NLP, as it enables them to create more intelligent and empathetic AI systems that can better understand and interact with human language.

Topic Modeling

Topic Modeling is a crucial technique in Natural Language Processing (NLP) that allows software professionals to uncover hidden patterns and themes within a large collection of text data. By using algorithms to automatically identify topics or themes present in a corpus of documents, topic modeling helps in organizing, understanding, and extracting valuable insights from unstructured text data.

One of the most popular algorithms used for topic modeling is Latent Dirichlet Allocation (LDA), which assumes that each document is a mixture of a small number of topics and that each word in the document is attributable to one of those topics. By applying LDA to a collection of documents, software professionals can uncover the underlying topics that are prevalent in the data and analyze how these topics are distributed across different documents.
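LDA is usually fitted either with variational inference (as in Gensim and scikit-learn) or with collapsed Gibbs sampling. The sketch below uses collapsed Gibbs sampling in plain Python: each token's topic is repeatedly resampled in proportion to how much its document already uses each topic times how strongly each topic favors that word. The priors `alpha` and `beta`, the iteration count, and the toy corpus are illustrative assumptions.

```python
import random

def lda_gibbs(docs, k, vocab_size, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Collapsed Gibbs sampling for LDA. `docs` are lists of integer word ids."""
    rng = random.Random(seed)
    ndk = [[0] * k for _ in docs]               # tokens per (document, topic)
    nkw = [[0] * vocab_size for _ in range(k)]  # tokens per (topic, word)
    nk = [0] * k                                # tokens per topic
    z = []                                      # current topic of every token
    for d, doc in enumerate(docs):              # random initial assignments
        zd = []
        for w in doc:
            t = rng.randrange(k)
            zd.append(t)
            ndk[d][t] += 1
            nkw[t][w] += 1
            nk[t] += 1
        z.append(zd)
    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                t = z[d][i]                     # take the token out of the counts
                ndk[d][t] -= 1
                nkw[t][w] -= 1
                nk[t] -= 1
                # p(topic j | everything else) is proportional to
                # (n_dj + alpha) * (n_jw + beta) / (n_j + V * beta)
                weights = [(ndk[d][j] + alpha) * (nkw[j][w] + beta) / (nk[j] + vocab_size * beta)
                           for j in range(k)]
                t = rng.choices(range(k), weights=weights)[0]
                z[d][i] = t                     # put it back under the sampled topic
                ndk[d][t] += 1
                nkw[t][w] += 1
                nk[t] += 1
    # theta: document-topic mixtures; phi: topic-word distributions.
    theta = [[(ndk[d][j] + alpha) / (len(doc) + k * alpha) for j in range(k)]
             for d, doc in enumerate(docs)]
    phi = [[(nkw[j][w] + beta) / (nk[j] + vocab_size * beta) for w in range(vocab_size)]
           for j in range(k)]
    return theta, phi

vocab = ["goal", "match", "team", "vote", "election", "party"]
docs = [[0, 1, 2, 0, 1], [2, 0, 1, 1], [3, 4, 5, 3], [4, 5, 3, 4, 2]]
theta, phi = lda_gibbs(docs, k=2, vocab_size=len(vocab))
for j, dist in enumerate(phi):
    print(f"topic {j}:", [vocab[w] for w in sorted(range(len(vocab)), key=lambda w: -dist[w])[:3]])
```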

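Another commonly used algorithm for topic modeling is Non-negative Matrix Factorization (NMF), which approximates a non-negative document-term (or term-document) frequency matrix as the product of two lower-dimensional non-negative matrices: one describing each topic as a weighted combination of terms, and one describing each document as a weighted combination of topics. Because NMF is a matrix factorization rather than a probabilistic generative model, it is often chosen when readily interpretable topics matter more than a full statistical model of the corpus.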
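One classic way to compute NMF is with the Lee and Seung multiplicative update rules, which keep every entry non-negative and never increase the squared reconstruction error. The pure-Python sketch below assumes a document-term matrix `V` (rows are documents), so `W` holds document-topic weights and `H` holds topic-term weights; the toy counts and helper names are hypothetical.

```python
import random

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def transpose(A):
    return [list(r) for r in zip(*A)]

def nmf(V, k, iters=200, seed=0, eps=1e-9):
    """Approximate non-negative V (n x m) as W (n x k) @ H (k x m) with multiplicative updates."""
    rng = random.Random(seed)
    n, m = len(V), len(V[0])
    W = [[rng.random() for _ in range(k)] for _ in range(n)]
    H = [[rng.random() for _ in range(m)] for _ in range(k)]
    for _ in range(iters):
        # H <- H * (W^T V) / (W^T W H); eps avoids division by zero.
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[H[i][j] * num[i][j] / (den[i][j] + eps) for j in range(m)] for i in range(k)]
        # W <- W * (V H^T) / (W H H^T)
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[W[i][j] * num[i][j] / (den[i][j] + eps) for j in range(k)] for i in range(n)]
    return W, H

def frobenius_error(V, W, H):
    WH = matmul(W, H)
    return sum((v - x) ** 2 for rv, rx in zip(V, WH) for v, x in zip(rv, rx)) ** 0.5

# Toy document-term counts: rows are documents, columns follow `vocab`.
vocab = ["goal", "match", "team", "vote", "election", "party"]
V = [
    [3, 2, 2, 0, 0, 0],
    [2, 3, 1, 0, 0, 0],
    [0, 0, 0, 3, 2, 2],
    [0, 0, 1, 2, 3, 2],
]
W, H = nmf(V, k=2)
for t, row in enumerate(H):
    top = sorted(range(len(vocab)), key=lambda j: row[j], reverse=True)[:3]
    print(f"topic {t}:", [vocab[j] for j in top])
```

scikit-learn's `NMF` class offers the same multiplicative-update solver (`solver="mu"`) alongside faster alternatives, so a sketch like this is mainly useful for understanding what the library computes.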

In this subchapter, software professionals will learn how to implement and fine-tune topic modeling algorithms like LDA and NMF using popular NLP libraries such as Gensim and scikit-learn. They will also explore techniques for evaluating the quality of topic models, such as topic coherence scores and perplexity.

By mastering topic modeling techniques, software professionals can enhance the capabilities of their NLP applications, including chatbots, translation services, and sentiment analysis tools. With the ability to automatically extract and organize topics from large volumes of text data, they can gain deeper insights and make more informed decisions based on the content of textual information.

Chapter 4: Deep Learning for Natural Language Processing

Word Embeddings

Word embeddings are a crucial concept in the field of Natural Language Processing (NLP) and play a key role in how artificial intelligence systems understand and interpret human language. In simple terms, word embeddings are a way to represent words as numerical vectors in a multi-dimensional space, where words with similar meanings are located closer to each other.
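"Closer" in an embedding space is usually measured with cosine similarity, the cosine of the angle between two vectors. The vectors below are hypothetical, hand-picked four-dimensional values rather than trained embeddings (real ones typically have hundreds of dimensions); they only illustrate how similarity is computed.

```python
import math

# Hypothetical 4-dimensional embeddings chosen by hand for illustration (not trained).
EMB = {
    "king":  [0.8, 0.6, 0.1, 0.0],
    "queen": [0.7, 0.7, 0.1, 0.1],
    "apple": [0.0, 0.1, 0.9, 0.4],
}

def cosine(u, v):
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

print(round(cosine(EMB["king"], EMB["queen"]), 3))  # 0.985
print(round(cosine(EMB["king"], EMB["apple"]), 3))  # 0.151
```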

One of the most popular techniques for creating word embeddings is Word2Vec, which trains a shallow neural network on a large corpus of text. In its skip-gram variant the network learns to predict the words that surround a given word; in its continuous bag-of-words (CBOW) variant it predicts a word from its surrounding context. Because words that appear in similar contexts end up with similar vectors, the learned embeddings capture semantic relationships between words.
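In the skip-gram setup, training examples are (center word, context word) pairs taken from a sliding window. This sketch only generates those pairs; it assumes a fixed window size, whereas the original Word2Vec implementation samples the window size and adds tricks such as negative sampling and subsampling of frequent words, none of which is shown.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) training pairs for every word within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

print(skipgram_pairs(["the", "cat", "sat", "down"], window=1))
# [('the', 'cat'), ('cat', 'the'), ('cat', 'sat'), ('sat', 'cat'), ('sat', 'down'), ('down', 'sat')]
```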

Word embeddings have many practical applications in NLP, such as improving the performance of language models, sentiment analysis, and machine translation. For example, by using word embeddings, a chatbot can better understand user input and generate more relevant responses. Similarly, sentiment analysis models can benefit from word embeddings by capturing the underlying sentiment behind the words used in a text.

As a software professional working in the field of NLP, it is essential to have a solid understanding of word embeddings and how they can be leveraged to enhance the performance of AI systems that deal with human language. By mastering the concept of word embeddings, you can unlock a wide range of possibilities for developing innovative NLP applications that can revolutionize the way we interact with technology.

Recurrent Neural Networks

Recurrent Neural Networks (RNNs) are a powerful type of neural network that is well-suited for sequential data such as language. In the context of Natural Language Processing (NLP), RNNs have proven to be highly effective for tasks such as language modeling, machine translation, sentiment analysis, and more.

One of the key advantages of RNNs is their ability to remember previous information in the sequence through the use of hidden states. This makes them particularly well-suited for tasks where context is important, such as language understanding and generation.
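The hidden state is what carries context forward. A vanilla (Elman) RNN updates it as h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h). The plain-Python sketch below implements that forward pass with hypothetical weights and shows that an input at the first step still affects the state after the last step.

```python
import math

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Vanilla RNN forward pass: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h), with h_0 = 0."""
    hidden = len(b_h)
    h = [0.0] * hidden
    states = []
    for x in xs:
        # The comprehension reads the previous h before the name is rebound.
        h = [
            math.tanh(
                sum(W_xh[i][j] * x[j] for j in range(len(x)))
                + sum(W_hh[i][j] * h[j] for j in range(hidden))
                + b_h[i]
            )
            for i in range(hidden)
        ]
        states.append(h)
    return states

# Hypothetical weights: 2-dimensional inputs, 2 hidden units.
W_xh = [[0.5, -0.3], [0.2, 0.4]]
W_hh = [[0.1, 0.2], [-0.3, 0.5]]
b_h = [0.0, 0.1]

seq_a = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
seq_b = [[0.0, 0.0], [0.0, 1.0], [0.0, 0.0]]  # differs only at the first step
print(rnn_forward(seq_a, W_xh, W_hh, b_h)[-1])
print(rnn_forward(seq_b, W_xh, W_hh, b_h)[-1])  # a different final state
```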

In NLP applications, RNNs are commonly used for tasks such as text classification, named entity recognition, and part-of-speech tagging. They can also be used to generate text, such as in language modeling or text generation tasks.

One of the challenges with traditional RNNs is the issue of vanishing gradients, which can make it difficult for the network to learn long-range dependencies in the data. To address this issue, more advanced versions of RNNs have been developed, such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), which are better able to capture long-term dependencies in the data.
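The vanishing-gradient problem follows from the chain rule. For a one-unit RNN h_t = tanh(w * h_{t-1} + x_t), each step multiplies the gradient by w * (1 - h_t^2); when that factor stays below 1 in magnitude, the gradient of the last state with respect to the first shrinks exponentially with sequence length. The sketch computes this product for illustrative values `w = 0.9` and a constant input of 0.5.

```python
import math

def gradient_through_time(w, xs, h0=0.0):
    """Return d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in xs:
        h = math.tanh(w * h + x)
        grad *= w * (1.0 - h * h)  # chain rule: d h_t / d h_{t-1}
    return grad

for steps in (5, 20, 50):
    print(steps, gradient_through_time(w=0.9, xs=[0.5] * steps))
```

LSTMs and GRUs mitigate this by adding gated, largely additive paths for the state, so the per-step factor can stay close to 1 when the network needs to remember something.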

Overall, RNNs are a versatile and powerful tool for NLP tasks, allowing software professionals to build sophisticated language processing systems that can understand, interpret, and generate human language with a high degree of accuracy. By mastering the intricacies of RNNs and their variants, software professionals can take their NLP applications to the next level and unlock a wide range of possibilities in the field of AI-driven language processing.

Convolutional Neural Networks for NLP

Convolutional Neural Networks (CNNs), first popularized in computer vision, have been widely applied to Natural Language Processing (NLP) as a tool for analyzing and extracting features from text data. In this subchapter, we will delve into the application of CNNs in NLP and explore how they can be used to improve the performance of various NLP tasks.

One of the key advantages of CNNs in NLP is their ability to capture local dependencies in text data through the use of convolutional filters. By applying these filters across different parts of the input text, CNNs can effectively learn patterns and features that are important for understanding the semantics of the text.
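A text CNN slides each filter across consecutive token embeddings, producing one score per window (an n-gram detector), then usually keeps only the strongest score with max-over-time pooling. The sketch shows a single width-2 filter over hypothetical 2-dimensional embeddings; a real model learns many filters and embeddings jointly.

```python
def conv1d_max_pool(embeddings, filt, bias=0.0):
    """One filter over token vectors, then ReLU and max-over-time pooling.

    embeddings: list of d-dimensional token vectors (needs at least len(filt) tokens)
    filt: list of w rows, one d-dimensional row per position in the window
    """
    width = len(filt)
    feature_map = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        score = bias + sum(
            f * e for f_row, e_row in zip(filt, window) for f, e in zip(f_row, e_row)
        )
        feature_map.append(max(0.0, score))  # ReLU activation
    return max(feature_map), feature_map     # max-over-time pooling

# Hypothetical 2-D embeddings and a bigram filter that responds to "not" followed by "good".
emb = {"the": [0.0, 0.0], "movie": [0.1, 0.0], "was": [0.0, 0.1],
       "not": [1.0, 0.0], "good": [0.0, 1.0]}
bigram_filter = [[1.0, 0.0], [0.0, 1.0]]
tokens = "the movie was not good".split()

pooled, fmap = conv1d_max_pool([emb[t] for t in tokens], bigram_filter)
print(fmap, pooled)  # [0.0, 0.2, 0.0, 2.0] 2.0
```

Pooling makes the output size independent of sentence length, which is why the pooled features can feed a fixed-size classification layer.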

For tasks such as text classification, sentiment analysis, and named entity recognition, CNNs have been reported to perform competitively with, and often better than, traditional machine learning models built on hand-crafted features. The ability of CNNs to automatically learn feature representations from raw text data makes them particularly well-suited for tasks where manual feature engineering is difficult or time-consuming.

In this subchapter, we will walk you through the architecture of a typical CNN for NLP, including the use of convolutional layers, pooling layers, and fully connected layers. We will also discuss common strategies for training CNNs on text data, such as initializing the embedding layer with pretrained word embeddings and applying transfer learning.

By the end of this subchapter, you will have a solid understanding of how CNNs can be applied to a wide range of NLP tasks and how they can help you build more accurate and efficient NLP models. Whether you are a seasoned software professional or just getting started in the field of NLP, this subchapter will equip you with the knowledge and tools you need to master the use of CNNs in NLP.

Chapter 5: Building NLP Applications

Chatbots and Virtual Assistants

Chatbots and virtual assistants have become increasingly popular in recent years, revolutionizing the way we interact with technology. These AI-powered tools are designed to understand and respond to human language, making them invaluable for a wide range of applications.

Chatbots, in particular, are used in customer service, marketing, and even healthcare to provide instant, personalized responses to user queries. They are often built using natural language processing (NLP) techniques, which enable them to understand the nuances of human language and generate appropriate responses.

Virtual assistants, on the other hand, are typically broader in scope than chatbots and can perform a wide range of tasks, from scheduling appointments to ordering groceries. They are designed to mimic human conversation more closely, using NLP algorithms to generate responses that sound natural and human-like.

For software professionals working in the field of NLP, understanding how chatbots and virtual assistants work is essential. This subchapter will explore the various techniques and algorithms used to build these AI-powered tools, as well as the challenges and opportunities they present.
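At the core of many task-oriented chatbots is intent detection: mapping a user message to one of a fixed set of goals, then choosing a response. The sketch below is a keyword-overlap baseline, assuming hypothetical intents, keywords, and canned replies; production systems usually replace the keyword scoring with a trained classifier and add dialogue state tracking.

```python
import re

# Hypothetical intents: (trigger keywords, canned response).
INTENTS = {
    "greeting": ({"hello", "hi", "hey"}, "Hello! How can I help you today?"),
    "hours": ({"open", "hours", "close"}, "We are open 9am to 5pm, Monday to Friday."),
    "refund": ({"refund", "return", "money"}, "I can help with refunds. What is your order number?"),
}
FALLBACK = "Sorry, I didn't understand that. Could you rephrase?"

def detect_intent(message):
    """Pick the intent whose keywords overlap most with the message; None if no overlap."""
    tokens = set(re.findall(r"[a-z]+", message.lower()))
    scores = {name: len(tokens & keywords) for name, (keywords, _) in INTENTS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None

def reply(message):
    intent = detect_intent(message)
    return INTENTS[intent][1] if intent else FALLBACK

print(reply("Hi there"))
print(reply("I want my money back, can I get a refund?"))
print(reply("What's the weather like?"))  # falls back
```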

From sentiment analysis to language translation, chatbots and virtual assistants are transforming the way we interact with technology. By mastering the principles of NLP, software professionals can unlock the full potential of these tools and create innovative solutions that enhance the user experience.

Machine Translation Services

In the world of Natural Language Processing (NLP), one of the most prominent applications is machine translation services. These services have revolutionized the way we communicate across different languages, enabling seamless communication and collaboration on a global scale.

Machine translation services utilize advanced algorithms and artificial intelligence to automatically translate text from one language to another. This process involves analyzing the structure and context of the text, identifying key phrases and words, and generating a translated version that is accurate and contextually appropriate.

There are several popular machine translation services available today, including Google Translate, Microsoft Translator, and DeepL. These services are constantly evolving and improving, thanks to the advancements in NLP technology.

For software professionals, understanding how machine translation services work and how to integrate them into their applications can be extremely beneficial. By leveraging these services, developers can create multilingual applications that cater to a global audience, breaking down language barriers and reaching a wider user base.

When utilizing machine translation services, it is important to consider factors such as accuracy, speed, and language support. Different services may excel in certain areas, so it is essential to choose the right one based on the specific requirements of your project.

Overall, machine translation services play a crucial role in the field of NLP, offering software professionals powerful tools to enhance communication and accessibility in the digital age. By mastering these services, developers can unlock new possibilities for their applications and contribute to a more connected and inclusive world.

Sentiment Analysis Tools

Sentiment analysis tools are invaluable resources for software professionals working in the field of natural language processing (NLP). These tools utilize machine learning algorithms to analyze text data and determine the sentiment or emotion expressed within it. By understanding the sentiment behind a piece of text, software professionals can gain valuable insights into how users feel about a particular product, service, or topic.

There are several popular sentiment analysis tools available to software professionals, each with its own unique features and capabilities. One such tool is the Natural Language Toolkit (NLTK), a widely used Python library for building NLP applications. NLTK provides a variety of text processing tools, including the rule-based VADER sentiment analyzer, making it a popular choice for developers working on NLP projects.

Another popular option is Stanford CoreNLP, a Java toolkit from the Stanford NLP Group that offers a wide range of NLP functionality, including sentiment analysis; the same group also maintains Stanza, a Python NLP library. CoreNLP is widely used in both research and industry, making it a common choice for software professionals looking to incorporate sentiment analysis into their applications.

In addition to these tools, there are also several sentiment analysis APIs available that provide pre-trained models for analyzing sentiment in text data. These APIs can be easily integrated into existing applications, making it quick and easy for software professionals to add sentiment analysis capabilities to their projects.

Overall, sentiment analysis tools are essential for software professionals working in the field of NLP. By leveraging these tools, developers can gain valuable insights into user sentiment and improve the overall user experience of their applications. Whether using libraries like NLTK or Stanford CoreNLP, or leveraging sentiment analysis APIs, software professionals have a variety of tools at their disposal to enhance their NLP projects.

Chapter 6: Evaluating NLP Models

Metrics for NLP Performance

In the world of Natural Language Processing (NLP), measuring performance is crucial to understanding how well a system is processing and understanding human language. Metrics play a vital role in evaluating the effectiveness of NLP algorithms and models. As software professionals working in the field of NLP, it is essential to be familiar with the key metrics used to assess the performance of NLP systems.

One of the most common metrics used in NLP is accuracy, which measures the percentage of correctly predicted labels or classifications. While accuracy is a good starting point, it may not always provide a complete picture of performance. Other important metrics to consider include precision, recall, and F1-score. Precision measures the proportion of true positive predictions among all positive predictions, while recall measures the proportion of true positive predictions among all actual positive instances. The F1-score is the harmonic mean of precision and recall, providing a balanced measure of a model’s performance.
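The gap between accuracy and the other metrics is easiest to see on imbalanced data. This sketch computes all four metrics for a binary task from hypothetical toy labels: a model that always predicts the majority class scores 80% accuracy yet finds none of the positive examples.

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def precision_recall_f1(y_true, y_pred, positive=1):
    """Binary precision, recall, and F1 for the `positive` class (0.0 when undefined)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if p == positive and t != positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if p != positive and t == positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Hypothetical imbalanced labels: only 2 of 10 examples are positive.
y_true = [0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
always_negative = [0] * 10
print(accuracy(y_true, always_negative))             # 0.8
print(precision_recall_f1(y_true, always_negative))  # (0.0, 0.0, 0.0)

better = [0, 0, 0, 0, 0, 0, 1, 0, 1, 1]  # one false positive, no misses
print(accuracy(y_true, better))             # 0.9
print(precision_recall_f1(y_true, better))  # precision 2/3, recall 1.0, F1 about 0.8
```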

In addition to these traditional metrics, there are specialized metrics for specific NLP tasks. For example, when sentiment is predicted as a continuous score or a numeric rating (such as one to five stars) rather than a discrete class, metrics like Mean Absolute Error (MAE) or Root Mean Squared Error (RMSE) can be used to measure how far predictions fall from the true values. In machine translation tasks, metrics like BLEU (Bilingual Evaluation Understudy) and METEOR (Metric for Evaluation of Translation with Explicit Ordering) are commonly used to assess translation quality by comparing system output against human reference translations.
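BLEU combines clipped n-gram precisions (each candidate n-gram counts at most as often as it appears in the reference) with a brevity penalty for candidates shorter than the reference. The sketch below is an unsmoothed, single-reference, sentence-level version with uniform weights; published scores are normally computed at corpus level with a standard tool such as sacreBLEU, so treat this only as an explanation of the formula.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def sentence_bleu(candidate, reference, max_n=4):
    """Unsmoothed BLEU of one candidate against one reference (both token lists)."""
    log_sum = 0.0
    for n in range(1, max_n + 1):
        cand, ref = ngrams(candidate, n), ngrams(reference, n)
        total = sum(cand.values())
        clipped = sum(min(count, ref[g]) for g, count in cand.items())  # modified precision
        if total == 0 or clipped == 0:
            return 0.0  # any zero precision makes unsmoothed BLEU zero
        log_sum += math.log(clipped / total) / max_n  # geometric mean, uniform weights
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1 - r / c)
    return brevity_penalty * math.exp(log_sum)

reference = "the cat is on the mat".split()
print(sentence_bleu(reference, reference))                                 # 1.0
print(sentence_bleu("the the the the the the".split(), reference, max_n=1))  # about 0.333
print(sentence_bleu("the cat".split(), reference, max_n=2))                # about 0.135
```

The second example shows why clipping exists: "the" appears six times in the candidate but only twice in the reference, so unigram precision is 2/6 rather than 6/6. The third shows the brevity penalty exp(1 - 6/2) cutting the score of a short but precise candidate.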

Understanding and interpreting these metrics is essential for optimizing NLP models and improving their performance. By leveraging these metrics effectively, software professionals can fine-tune their NLP systems to achieve higher levels of accuracy and efficiency in applications such as chatbots, translation services, and sentiment analysis.

Cross-Validation and Hyperparameter Tuning

Cross-validation and hyperparameter tuning are crucial aspects of building robust and effective natural language processing (NLP) models. In this subchapter, we will delve into the importance of these techniques and how they can significantly improve the performance of your NLP models.

Cross-validation is a method used to evaluate the performance of a machine learning model by training and testing it on multiple subsets of the data. This helps to ensure that the model is not overfitting to the training data and can generalize well to unseen data. In the context of NLP, cross-validation is particularly important due to the complexity and variability of language data.
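In k-fold cross-validation, the data is split into k folds; each fold serves once as the test set while the remaining folds are used for training, and the k scores are averaged. The sketch below only generates the index splits, assuming a single seeded shuffle; scikit-learn's `KFold` and `StratifiedKFold` (which preserves class proportions, useful for imbalanced text labels) provide the same service.

```python
import random

def k_fold_indices(n_samples, k=5, shuffle=True, seed=0):
    """Yield (train_idx, test_idx) pairs; every sample is in exactly one test fold."""
    idx = list(range(n_samples))
    if shuffle:
        random.Random(seed).shuffle(idx)
    # The first n_samples % k folds get one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, test
        start += size

for train, test in k_fold_indices(10, k=3):
    print(len(train), "train /", len(test), "test:", sorted(test))
```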

Hyperparameter tuning involves selecting good values for the settings that control the learning process of a machine learning model, such as the learning rate or regularization strength, which are fixed before training rather than learned from the data. This process is essential for maximizing the performance of your NLP models and can significantly impact their accuracy and efficiency.

By combining cross-validation with hyperparameter tuning, software professionals can fine-tune their NLP models to achieve the best possible performance. This iterative process allows for the identification of the most effective parameters for a given dataset, leading to improved accuracy and generalization capabilities.

In this subchapter, we will explore different techniques for cross-validation and hyperparameter tuning, including grid search, random search, and Bayesian optimization. We will also discuss best practices for implementing these techniques in your NLP projects and provide practical examples to help you get started.
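Grid search is the simplest of these strategies: evaluate every combination of candidate values with cross-validation and keep the best. The sketch tunes two hyperparameters of a small k-nearest-neighbor classifier (number of neighbors and the Minkowski distance exponent `p`) on hypothetical 2-D toy data standing in for document features; the helper names are illustrative. scikit-learn's `GridSearchCV` and `RandomizedSearchCV` implement the grid and random variants for real projects.

```python
import random
from collections import Counter
from itertools import product

def k_fold_indices(n, k, seed=0):
    """Shuffle once, deal indices round-robin into k folds, and yield (train, test)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, folds[i]

def knn_predict(train_X, train_y, x, n_neighbors, p):
    """Majority vote among the n_neighbors closest points under the Minkowski-p distance."""
    dists = sorted(
        (sum(abs(a - b) ** p for a, b in zip(tx, x)) ** (1 / p), ty)
        for tx, ty in zip(train_X, train_y)
    )
    votes = Counter(label for _, label in dists[:n_neighbors])
    return votes.most_common(1)[0][0]

def cv_accuracy(X, y, n_neighbors, p, k=5):
    """Mean accuracy over k folds for one hyperparameter setting."""
    scores = []
    for train, test in k_fold_indices(len(X), k):
        tX, ty = [X[i] for i in train], [y[i] for i in train]
        correct = sum(knn_predict(tX, ty, X[i], n_neighbors, p) == y[i] for i in test)
        scores.append(correct / len(test))
    return sum(scores) / len(scores)

def grid_search(X, y, grid):
    """Score every combination in the grid; return the best setting and all scores."""
    results = {
        (n_neighbors, p): cv_accuracy(X, y, n_neighbors, p)
        for n_neighbors, p in product(grid["n_neighbors"], grid["p"])
    }
    best = max(results, key=results.get)
    return best, results

# Hypothetical toy data: two 2-D clusters with labels 0 and 1.
rng = random.Random(42)
X = ([[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(20)]
     + [[rng.gauss(3, 1), rng.gauss(3, 1)] for _ in range(20)])
y = [0] * 20 + [1] * 20

best, results = grid_search(X, y, {"n_neighbors": [1, 3, 5], "p": [1, 2]})
print("best (n_neighbors, p):", best, "CV accuracy:", round(results[best], 3))
```

Because the best setting is chosen using these same folds, its cross-validation score is an optimistic estimate; a separate held-out test set or nested cross-validation gives a fairer final number.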

By mastering cross-validation and hyperparameter tuning, software professionals can build more reliable and accurate NLP models that meet the needs of their users and stakeholders. These techniques are essential for staying ahead in the rapidly evolving field of natural language processing and ensuring the success of your AI projects.

Ethical Considerations in NLP

In the field of Natural Language Processing (NLP), ethical considerations play a crucial role in ensuring that the technology is used responsibly. As software professionals working in NLP, we need to be mindful of the potential ethical implications of the algorithms and models we develop.

One of the key ethical considerations in NLP is bias. Bias can manifest in various forms, including gender bias, racial bias, and cultural bias. It is important to be aware of these biases and to take steps to mitigate them in our NLP systems. This could involve ensuring that training data is diverse and representative of the population. It also means regularly auditing and monitoring our models for bias, for example by comparing error rates and outcomes across demographic groups.
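A basic bias audit compares a model's behavior across groups. The sketch below computes per-group accuracy and the rate of positive predictions, then reports the largest gap in positive rates, a quantity often called the demographic parity difference. This is only one of several fairness metrics, and which one is appropriate depends on the application. The function names and the resume-screening data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Sequence


def group_metrics(predictions: Sequence[int], labels: Sequence[int],
                  groups: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """Positive-prediction rate and accuracy per group for a binary classifier."""
    counts = defaultdict(lambda: {"n": 0, "positive": 0, "correct": 0})
    for pred, label, group in zip(predictions, labels, groups):
        c = counts[group]
        c["n"] += 1
        c["positive"] += pred
        c["correct"] += int(pred == label)
    return {
        group: {"positive_rate": c["positive"] / c["n"], "accuracy": c["correct"] / c["n"]}
        for group, c in counts.items()
    }


def demographic_parity_gap(metrics: Dict[str, Dict[str, float]]) -> float:
    """Largest difference in positive-prediction rate between any two groups."""
    rates = [m["positive_rate"] for m in metrics.values()]
    return max(rates) - min(rates)


# Hypothetical outputs of a resume-screening model (1 = "advance candidate").
predictions = [1, 1, 0, 1, 0, 0, 1, 0]
labels = [1, 0, 0, 1, 0, 1, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

metrics = group_metrics(predictions, labels, groups)
print(metrics)
print("demographic parity gap:", demographic_parity_gap(metrics))
```

In this toy data both groups have the same accuracy (0.75), yet group A is advanced three times as often as group B (0.75 versus 0.25). This is exactly the kind of disparity that an overall accuracy figure would hide.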

Another ethical consideration in NLP is privacy. When working with sensitive data, such as personal conversations or medical records, it is important to prioritize the privacy and security of the data. This may involve implementing encryption, access controls, and anonymization techniques to protect the data from unauthorized access.
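One simple de-identification step is to mask obvious personal identifiers before text is stored or used for training. The sketch below uses two deliberately simplified regular expressions, for email addresses and North American style phone numbers. These patterns are assumptions for illustration only. Real systems need much broader coverage (names, addresses, account numbers), often use named entity recognition models, and pattern-based masking alone does not guarantee anonymity.

```python
import re

# Simplified, illustrative patterns; they will miss many real-world formats.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each matched identifier with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


print(redact("Contact jane.doe@example.com or 555-123-4567."))
```

Replacing identifiers with typed placeholders rather than deleting them keeps the sentence structure intact. This matters if the redacted text is later used to train or evaluate an NLP model.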

Transparency is another key ethical consideration in NLP. Developers should be open about how their NLP systems work, including the data they use, the algorithms they employ, and how they arrive at their decisions. This transparency helps build trust with users and stakeholders and ensures that our NLP systems are used in a fair and accountable manner.

Ultimately, as software professionals in the field of NLP, it is our responsibility to uphold high ethical standards in the development and deployment of NLP systems. By being mindful of bias, prioritizing privacy, and promoting transparency, we can help ensure that NLP technology is used in a responsible and ethical manner.

Chapter 7: Challenges and Future Trends in NLP

Common Challenges in NLP

In the fast-evolving field of Natural Language Processing (NLP), software professionals often face a myriad of challenges that can hinder the development and implementation of successful NLP solutions. In this subchapter, we will explore some of the most common challenges encountered in NLP and discuss strategies for overcoming them.

One of the primary challenges in NLP is the ambiguity and complexity of human language. Natural language is inherently nuanced and context-dependent, making it difficult for machines to accurately interpret and generate text. This challenge is further compounded by the vast array of languages, dialects, and slang that exist in the world.

Another common challenge in NLP is the lack of labeled training data. Machine learning algorithms rely heavily on high-quality labeled data to effectively learn patterns and relationships within text. However, obtaining large amounts of accurately labeled data can be a time-consuming and costly process, especially for niche languages or specialized domains.

Additionally, the issue of bias in NLP models is a growing concern within the field. Biases present in training data, whether intentional or unintentional, can lead to unfair or discriminatory outcomes in NLP applications. Software professionals must be vigilant in identifying and mitigating bias in their NLP models to ensure ethical and equitable results.

Finally, the rapid pace of technological advancements in NLP presents a challenge in staying up-to-date with the latest tools, techniques, and research. As new algorithms and methodologies are continuously being developed, software professionals must actively engage in ongoing learning and professional development to remain competitive in the field of NLP.

By understanding and addressing these common challenges in NLP, software professionals can enhance their ability to develop robust and effective NLP solutions that meet the needs of modern society.

Future Trends in Natural Language Processing

As technology continues to advance at a rapid pace, the field of Natural Language Processing (NLP) is also evolving with new trends that are shaping the future of the industry. In this subchapter, we will explore some of the key trends that software professionals working in NLP should be aware of.

One of the most significant trends in NLP is the growing importance of deep learning. Deep learning models are neural networks with many layers, such as recurrent networks and transformers. They have substantially improved the accuracy of NLP systems on tasks such as translation, question answering, and text generation. By leveraging these models, software professionals can create more sophisticated NLP applications that understand, interpret, and generate human language with greater precision.

Another trend that is shaping the future of NLP is the rise of multimodal NLP systems. These systems combine text with other forms of data, such as images or audio, to create more comprehensive and context-aware NLP applications. By incorporating multiple modalities, software professionals can enhance the capabilities of NLP systems, enabling them to better understand and respond to human language in a variety of contexts.

Furthermore, the increasing focus on ethical and responsible AI is also impacting the future of NLP. Software professionals working in NLP must be mindful of the potential biases and ethical implications of the data and algorithms they use. By incorporating ethical considerations into the design and development of NLP systems, professionals can help ensure that their applications are fair, transparent, and accountable.

Overall, the future of NLP is bright, with exciting trends and developments on the horizon. By staying informed and adapting to these trends, software professionals can continue to push the boundaries of what is possible with NLP and create innovative applications that enhance the way we interact with technology.

Ethical and Privacy Concerns in NLP

As software professionals delve into the world of Natural Language Processing (NLP), they must also consider the ethical and privacy concerns that come with working in this field. NLP technology has the power to collect, analyze, and interpret vast amounts of human language data, raising important questions about how this data should be handled.

One major ethical concern in NLP is the issue of bias. As NLP models are trained on large datasets of human language, they may inadvertently learn and perpetuate biases present in the data. This can lead to discriminatory outcomes in areas such as hiring practices, language translation, and sentiment analysis. Software professionals must be vigilant in recognizing and mitigating bias in their NLP models to ensure fair and equitable results.

Privacy is another critical concern when working with NLP technology. As NLP systems process and store sensitive personal information, there is a risk of data breaches and privacy violations. Software professionals must implement robust security measures to protect the privacy of users’ data and comply with regulations such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA).

Transparency and accountability are key principles in addressing ethical and privacy concerns in NLP. Software professionals should be transparent about how their NLP systems work, including the data sources used, the algorithms employed, and the potential biases present. Additionally, they must establish mechanisms for users to access, correct, and delete their personal data to uphold privacy rights.

By prioritizing ethical considerations and privacy protections in their NLP projects, software professionals can build trust with users and stakeholders and contribute to the responsible development of AI technologies.

Chapter 8: Case Studies in Natural Language Processing

NLP in Healthcare

Natural Language Processing (NLP) has made significant strides in transforming the healthcare industry by enabling software professionals to develop innovative solutions for improving patient care, streamlining operations, and advancing medical research. From analyzing patient data to enhancing communication between healthcare providers and patients, NLP has the potential to revolutionize the way healthcare is delivered.

One of the key applications of NLP in healthcare is in clinical documentation. By automating the process of transcribing and analyzing clinical notes, NLP can help healthcare professionals save time and improve the accuracy of medical records. This not only leads to more efficient patient care but also enables healthcare organizations to better track and evaluate treatment outcomes.

Another important use case for NLP in healthcare is in disease diagnosis and prediction. By analyzing unstructured data from medical records, lab reports, and other sources, NLP algorithms can help identify patterns and trends that may indicate the presence of certain diseases or conditions. This can lead to earlier detection and intervention, ultimately improving patient outcomes.
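A recurring difficulty when mining clinical notes is negation. "Patient denies chest pain" mentions chest pain but should not be counted as evidence of it. The sketch below is a much simplified rule-based approach, loosely in the spirit of negation detectors such as NegEx. It finds condition terms sentence by sentence and marks a term as negated if a negation cue appears within a few tokens before it. The condition list, the cue list, and the window size are hypothetical and far smaller than anything usable clinically.

```python
import re
from typing import Dict, List

# Hypothetical, tiny vocabularies for illustration only.
CONDITIONS = ["chest pain", "fever", "shortness of breath", "diabetes"]
NEGATION_CUES = ["no", "denies", "without", "negative for"]
WINDOW = 5  # number of tokens before a mention that are checked for a cue


def find_conditions(note: str) -> Dict[str, List[str]]:
    """Split condition mentions into affirmed and negated, sentence by sentence."""
    found: Dict[str, List[str]] = {"affirmed": [], "negated": []}
    for sentence in re.split(r"[.;\n]", note.lower()):
        text = " ".join(re.findall(r"[a-z]+", sentence))  # normalize spacing
        for term in CONDITIONS:
            pos = text.find(term)  # first mention per sentence, for brevity
            if pos == -1:
                continue
            window = " ".join(text[:pos].split()[-WINDOW:])
            negated = any(re.search(rf"\b{re.escape(cue)}\b", window) for cue in NEGATION_CUES)
            found["negated" if negated else "affirmed"].append(term)
    return found


note = "Patient denies chest pain. Reports fever and shortness of breath; history of diabetes."
print(find_conditions(note))
```

Restricting the negation check to the same sentence and a short window is a deliberate trade-off. It keeps "no fever" from negating a condition mentioned several clauses later, at the cost of missing some long-range negations.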

Additionally, NLP can be used to enhance patient engagement and communication. Chatbots powered by NLP technology can provide patients with personalized information, answer questions, and even assist with scheduling appointments or refilling prescriptions. This not only improves the patient experience but also helps healthcare providers deliver more efficient and effective care.

Overall, the integration of NLP in healthcare has the potential to revolutionize the industry, making it more efficient, accurate, and patient-centered. Software professionals who specialize in NLP have a unique opportunity to drive innovation in healthcare and make a lasting impact on the lives of patients around the world.

NLP in Finance

In the realm of finance, Natural Language Processing (NLP) has emerged as a powerful tool for software professionals looking to streamline processes, improve accuracy, and enhance overall customer experience. NLP in finance involves the use of artificial intelligence to understand, interpret, and generate human language in the context of financial data and transactions.

One key application of NLP in finance is sentiment analysis, which involves analyzing text data to determine the sentiment or emotion behind it. This is valuable in finance in two ways. Sentiment expressed in news articles, analyst reports, and social media can inform investment decisions. Sentiment in customer feedback can guide marketing strategies and customer service.

Another important use case for NLP in finance is in the realm of chatbots and virtual assistants. These tools can help financial institutions provide personalized customer service, answer inquiries, and even assist with basic transactions. By leveraging NLP technology, these chatbots can understand natural language queries and provide accurate and relevant responses in real time.

NLP can also be used in finance for tasks such as document summarization, information extraction, and fraud detection. By automatically summarizing lengthy financial reports, extracting key information from documents, and identifying potentially fraudulent activities, NLP can help financial professionals save time and make more informed decisions.
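Document summarization comes in two broad flavors: abstractive (generating new sentences) and extractive (selecting existing ones). A classic extractive baseline scores each sentence by how frequent its content words are across the whole document and keeps the top-scoring sentences. The sketch below implements that baseline. The stopword list and the sample report text are assumptions made for illustration.

```python
import re
from collections import Counter

# Small illustrative stopword list; real pipelines use a fuller list.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "for", "on",
             "is", "was", "were", "by", "with", "it", "its", "this", "that", "as", "at"}


def content_words(text: str) -> list:
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def summarize(text: str, n_sentences: int = 2) -> str:
    """Extractive summary: keep the sentences whose words are most frequent overall."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        return sum(freq[w] for w in words) / (len(words) or 1)  # normalize by length

    best = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:n_sentences]
    return " ".join(sentences[i] for i in sorted(best))  # restore original order


report = ("Revenue grew 12 percent in the third quarter. "
          "Revenue growth was driven by strong loan demand. "
          "The office moved to a new building. "
          "Loan demand and revenue are expected to stay strong.")
print(summarize(report))
```

Dividing by sentence length keeps long sentences from winning simply because they contain more words. Returning the chosen sentences in their original order keeps the summary readable.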

Overall, NLP in finance holds immense potential for software professionals looking to revolutionize the way financial services are delivered and consumed. By mastering the principles and techniques of NLP, software professionals can unlock new possibilities for enhancing customer experiences, improving operational efficiency, and driving business success in the ever-evolving world of finance.

NLP in Social Media Analysis

Social media has become a treasure trove of data for businesses looking to understand their customers better and make more informed decisions. Natural Language Processing (NLP) plays a crucial role in analyzing this vast amount of unstructured text data.

By harnessing the power of NLP, software professionals can extract valuable insights from social media conversations, comments, reviews, and posts. NLP algorithms can help identify trends, sentiment, and user preferences, enabling businesses to tailor their products and services to meet customer needs more effectively.

One of the key applications of NLP in social media analysis is sentiment analysis. By using NLP techniques, software professionals can automatically classify social media posts as positive, negative, or neutral, allowing businesses to gauge customer satisfaction and sentiment towards their brand in real time. This information can then be used to improve customer service, marketing strategies, and product development.
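The simplest way to sort posts into positive, negative, and neutral is a lexicon-based classifier. Each known word carries a score, the scores are summed, and thresholds decide the label. The sketch below adds one common refinement, flipping a word's polarity when it directly follows a negator ("not good"). The lexicon, the negator list, and the threshold are hypothetical toy values, whereas real sentiment lexicons contain thousands of scored entries.

```python
import re

# Hypothetical toy lexicon and negators, for illustration only.
LEXICON = {"love": 2, "great": 2, "good": 1, "fast": 1,
           "bad": -1, "slow": -1, "terrible": -2, "hate": -2}
NEGATORS = {"not", "never", "no"}


def classify(post: str, threshold: float = 0.5) -> str:
    """Label a post positive, negative, or neutral from summed word scores."""
    tokens = re.findall(r"[a-z']+", post.lower())
    score = 0
    for i, tok in enumerate(tokens):
        value = LEXICON.get(tok, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:  # "not good" counts as negative
            value = -value
        score += value
    if score >= threshold:
        return "positive"
    if score <= -threshold:
        return "negative"
    return "neutral"


for post in ["I love the new app, it's great!",
             "Support was not good and delivery was slow",
             "The package arrived on Tuesday"]:
    print(classify(post), "|", post)
```

Lexicon methods are transparent and need no training data, but they miss sarcasm and domain-specific usage. That is why trained classifiers are often preferred once labeled data is available.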

Another important aspect of NLP in social media analysis is topic modeling. Topic models such as Latent Dirichlet Allocation (LDA) group words that tend to appear together into topics. This allows software professionals to identify the most discussed subjects on social media platforms, helping businesses stay ahead of trends and understand what their customers are talking about.
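To show what a topic model does internally, here is a compact sketch of Latent Dirichlet Allocation trained with collapsed Gibbs sampling. LDA is one common topic model, not the only one. The sampler starts from random topic assignments. It then repeatedly reassigns each word token to a topic with probability proportional to (n(d,k) + α) · (n(k,w) + β) / (n(k) + Vβ), where n(d,k) counts topic k in document d, n(k,w) counts word w in topic k, n(k) is the total number of tokens in topic k, and V is the vocabulary size. The posts, the hyperparameter values, and the assumption that text is already tokenized and stopword-filtered are all illustrative. For real workloads a library implementation, such as Gensim's LDA model, is the practical choice.

```python
import random
from typing import List


def lda_gibbs(docs: List[List[str]], n_topics: int, alpha: float = 0.1, beta: float = 0.01,
              n_iter: int = 200, seed: int = 0, top_n: int = 3) -> List[List[str]]:
    """Collapsed Gibbs sampling for LDA; returns the top words of each topic."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    doc_topic = [[0] * n_topics for _ in docs]       # n(d,k)
    topic_word = [[0] * V for _ in range(n_topics)]  # n(k,w)
    topic_total = [0] * n_topics                     # n(k)
    assignments = []
    for d, doc in enumerate(docs):                   # random initial topic per token
        z_doc = []
        for w in doc:
            k = rng.randrange(n_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][word_id[w]] += 1
            topic_total[k] += 1
        assignments.append(z_doc)

    for _ in range(n_iter):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                wid, k = word_id[w], assignments[d][i]
                # Remove the current token from the counts before resampling it.
                doc_topic[d][k] -= 1
                topic_word[k][wid] -= 1
                topic_total[k] -= 1
                # Unnormalized conditional probability of each topic for this token.
                weights = [(doc_topic[d][t] + alpha) * (topic_word[t][wid] + beta)
                           / (topic_total[t] + V * beta) for t in range(n_topics)]
                k = rng.choices(range(n_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][wid] += 1
                topic_total[k] += 1

    return [[vocab[i] for i in sorted(range(V), key=lambda i: -topic_word[k][i])[:top_n]]
            for k in range(n_topics)]


# Hypothetical, already-tokenized social media posts.
posts = ["battery drains fast battery charger", "new phone battery charger broken",
         "screen battery phone charger", "delivery late package refund",
         "package lost delivery refund shipping", "shipping delay package delivery"]
docs = [p.split() for p in posts]
topics = lda_gibbs(docs, n_topics=2, n_iter=200, seed=1)
for k, words in enumerate(topics):
    print(f"topic {k}: {words}")
```

The number of topics is itself a hyperparameter. On real social media data it is usually chosen by inspecting topic quality or a coherence score rather than fixed in advance.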

Overall, NLP in social media analysis empowers software professionals to make data-driven decisions based on the vast amount of text data available on social media platforms. By mastering NLP techniques, software professionals can unlock valuable insights that can drive business growth and success in today’s digital age.

Chapter 9: Resources for Software Professionals in NLP

Online Courses and Tutorials

In today’s fast-paced world, technology is constantly evolving, and as software professionals, it is crucial to stay updated on the latest trends and advancements in the field. One way to enhance your skills and knowledge in Natural Language Processing (NLP) is by taking online courses and tutorials.

Online courses and tutorials offer a convenient and flexible way to learn new concepts and techniques in NLP. Whether you are a beginner looking to get started in the field or an experienced professional seeking to deepen your understanding, there are courses available for every skill level.

These courses cover a wide range of topics in NLP, including text analysis, sentiment analysis, machine translation, and more. Many are taught by industry experts and academics who provide valuable insights and practical tips to help you master the intricacies of NLP.

One of the key benefits of online courses and tutorials is the ability to learn at your own pace. You can study when it is most convenient for you, whether that is early in the morning or late at night. This flexibility allows you to balance your professional and personal commitments while still advancing your skills in NLP.

Additionally, online courses often include hands-on projects and assignments that allow you to apply what you have learned in a real-world context. This practical experience is invaluable for honing your skills and building a strong foundation in NLP.

By taking advantage of online courses and tutorials, software professionals can stay ahead of the curve in the rapidly evolving field of NLP. Whether you are looking to enhance your existing skills or break into the field, online courses are a valuable resource for mastering NLP and advancing your career.

NLP Libraries and Tools

In the world of Natural Language Processing (NLP), having access to the right libraries and tools can make a significant difference in the efficiency and accuracy of your projects. As a software professional diving into the realm of NLP, it is crucial to familiarize yourself with the various libraries and tools available to streamline your workflow and enhance the performance of your applications.

One of the most popular NLP libraries is NLTK (Natural Language Toolkit), a comprehensive platform that provides easy-to-use interfaces for over 50 corpora and lexical resources, as well as robust tools for text processing and analysis. Another widely used library is spaCy, which offers pre-trained models for various NLP tasks such as named entity recognition, part-of-speech tagging, and dependency parsing.

For more advanced NLP tasks, deep learning frameworks such as TensorFlow and PyTorch are widely used. These frameworks allow you to build and train neural networks for tasks like machine translation, sentiment analysis, and text generation. For word embeddings and semantic analysis, Gensim is a valuable library. It provides implementations of Word2Vec and related models that represent words as vectors, so that words with similar meanings end up close together.
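Once words are represented as vectors, "semantic similarity" usually means cosine similarity: the cosine of the angle between two vectors, which is close to 1 when they point in the same direction. The sketch below computes it by hand and performs a nearest-neighbor lookup. The three-dimensional vectors are made up for illustration. Real Word2Vec embeddings are learned from a corpus and typically have a few hundred dimensions, and Gensim exposes similar lookups on trained vectors.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical toy embeddings; real ones are learned from data.
EMBEDDINGS: Dict[str, List[float]] = {
    "stock": [0.9, 0.1, 0.3],
    "bond": [0.8, 0.2, 0.4],
    "banana": [0.1, 0.9, 0.2],
}


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity: dot product divided by the product of vector lengths."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def most_similar(word: str, k: int = 1) -> List[Tuple[str, float]]:
    """Return the k other words whose vectors are closest to `word`."""
    target = EMBEDDINGS[word]
    scored = [(other, cosine(target, vec)) for other, vec in EMBEDDINGS.items() if other != word]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


print(most_similar("stock", k=2))
```

Cosine similarity ignores vector length and compares only direction. This is why it is the usual choice for embeddings, whose magnitudes often reflect word frequency rather than meaning.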

When it comes to sentiment analysis and text classification, tools like VADER (Valence Aware Dictionary and sEntiment Reasoner) and TextBlob provide out-of-the-box solutions for scoring text by sentiment. VADER is a rule-based lexicon tuned for social media text, while TextBlob reports both polarity and subjectivity. These tools can be easily integrated into your applications to extract valuable insights from textual data.

Overall, mastering NLP libraries and tools is essential for software professionals looking to harness the power of AI in understanding, interpreting, and generating human language. By leveraging the right tools and libraries, you can unlock the full potential of NLP in applications ranging from chatbots to translation services and sentiment analysis.

NLP Research Papers and Journals

In the world of Natural Language Processing (NLP), staying up-to-date with the latest research papers and journals is crucial for software professionals looking to master this cutting-edge technology. This subchapter will explore some of the most influential and groundbreaking research papers and journals in the field of NLP.

Some of the best-known publication venues in the NLP community belong to the Association for Computational Linguistics (ACL). They include the journals Computational Linguistics and Transactions of the Association for Computational Linguistics (TACL), together with the proceedings of the ACL's conferences, which are freely available in the ACL Anthology. Many of the most cited papers in the field have appeared in these venues, making them a must-read for anyone looking to stay informed on the latest developments in NLP.

Another important journal in the NLP community is the Journal of Artificial Intelligence Research (JAIR), which features research on a variety of topics related to artificial intelligence, including NLP. This journal is known for publishing high-quality research papers that push the boundaries of what is possible in the field of NLP.

In addition to journals, a number of individual research papers have had a significant impact on the field of NLP. Notable examples include "Attention Is All You Need" by Vaswani et al., which introduced the Transformer architecture that has revolutionized NLP. Another is "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding" by Devlin et al., which introduced the BERT model that has become a cornerstone of many NLP applications.

By staying informed on the latest research papers and journals in the field of NLP, software professionals can gain valuable insights and stay ahead of the curve in this rapidly evolving field. Whether you are interested in chatbots, translation services, sentiment analysis, or any other aspect of NLP, keeping up with the latest research is essential for mastering this exciting technology.